Question: Why does everyone deep in AI sound completely unhinged?

A few days ago I was having a conversation with a couple of colleagues and somehow, as it always does, the topic drifted to AI. Not the tools, not the research, not the actual work, but the people. The ones who are deep in it. The CEOs announcing we are months away from AGI. The researchers warning the machines will kill us all. The former employees who left to "pursue other opportunities" and are now on Substack explaining why we have eighteen months to live. Someone at the table asked the question out loud and we all just sat there for a second nodding, because it is a genuinely good question. Why does everyone deep in AI sound completely unhinged? I thought about it for a few days and I think it is worth sharing my take, because I keep seeing this question pop up everywhere and the real answer is more interesting than "they are just weird."

Short answer: incentives. And in the case of the doomers, also incentives, but dressed in a turtleneck and carrying a philosophy degree they have definitely mentioned unprompted at a dinner party.

Let me explain both, because this particular circus has two rings and a parking lot full of food trucks that are also somehow part of the problem. Let us start with the hype side.

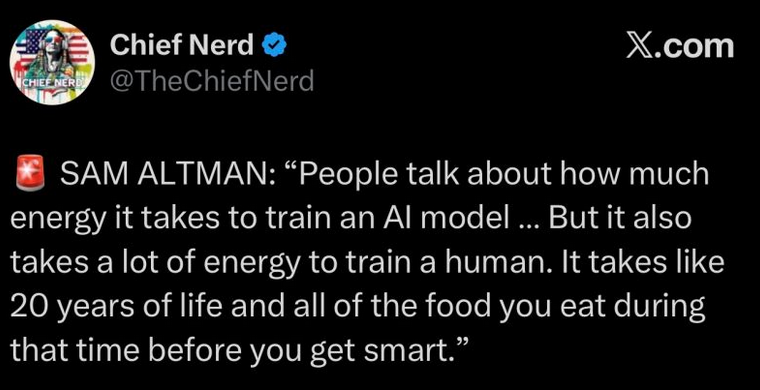

The CEOs are not crazy. The incentive environment just rewards behaving like they are. If you post something measured and nuanced about your AI product, three people read it and one of them is your mom. If you say "AI will replace all software engineers by 2027," it spreads faster than a layoff rumor on a Friday afternoon, except at Amazon where that is not a rumor, it is a quarterly tradition with its own internal wiki page. Extreme predictions generate media pickup, investor excitement, founder mythology, and a very specific kind of talent FOMO that makes brilliant people relocate their entire lives for a company that has not turned a profit and probably never will. A calm, accurate post about transformer scaling laws does not trend on LinkedIn. It sits there quietly being correct while nobody cares.

The valuation math makes this worse. Most frontier AI companies are valued on future potential, not current revenue, which means the bigger the claimed transformation, the bigger the total addressable market they can point to. Frame AI as incremental productivity tooling and you justify a modest SaaS multiple. Frame it as restructuring civilization as we know it and suddenly you are not a software company, you are an inevitability. You are not selling a product. You are selling destiny. Destiny gets a much better multiple.

Then there is the founder archetype problem. Tech culture has a type and that type does not hedge. Grand predictions are part of the brand. Being measured and cautious is professional suicide in a media environment that rewards boldness and punishes nuance. Even when the prediction is completely wrong, which it frequently is, the boldness itself reinforces the visionary narrative. Accuracy is for academics. Vision is for magazine covers and All-In podcast appearances where everyone agrees with each other for two hours and calls it debate.

Mission inflation is also just recruiting with better marketing. Top talent is scarce and everyone knows it. If your CEO frames the company as building AGI and reshaping what it means to be human, that attracts a different caliber of candidate than "we are optimizing enterprise workflow automation for mid-market logistics companies." Nobody moves their family across the country for workflow optimization. They move for civilization-scale missions, even when the actual product is a glorified chatbot that summarizes Slack threads and occasionally invents a meeting that never happened.

But the CEOs are only one part of the hype ecosystem. They have a whole supporting cast and that cast is somehow worse.

There are the LinkedIn AI influencers, who appeared overnight sometime around late 2022 and have been posting daily ever since about how AI will transform everything, disrupt everything, and unlock your full potential as a human being, usually with a carousel that has eleven slides and ends with "follow me for more." These people did not exist before ChatGPT launched. They have no verifiable background in AI, machine learning, or anything adjacent. What they do have is a Canva subscription, a very confident tone, and an uncanny ability to repackage whatever OpenAI announced last week as their own original insight. They are not experts. They are fast.

Then there are the parasitic consultants, the ones who have pivoted their entire professional identity to AI in the time it takes most people to update their LinkedIn headline. Former PMs who now claim deep AI expertise based on having used ChatGPT to write their OKRs. Strategy consultants who attended one AI summit and are now available for your transformation journey at a very reasonable day rate. They are operating in a market where nobody has figured out how to verify the credentials yet, and they are making excellent use of the window. They will be pivoting to whatever comes next in about eighteen months.

And then there is the part nobody wants to say out loud. Some of the most alarming AI doom rhetoric comes from the same CEOs doing all of the above. If you loudly announce that AI is incredibly powerful and potentially catastrophic, you position yourself as a necessary policy stakeholder, you signal that you are a serious frontier lab, and you help create barriers to entry that smaller competitors cannot clear. That is a business strategy, and if you think otherwise, you are being naive. The worried face is important. You have to really commit to it.

Now let us cross to the other ring, which has significantly more 🤡.

The doomer ecosystem is an entire food chain and it is worth understanding who is actually in it before you take any of it seriously.

At the top you have the sincere believers. Researchers, philosophers, rationalists, and a rotating cast of former AI company employees who left under circumstances publicly described as "to pursue other opportunities," which in this industry means something happened and nobody is allowed to say what. They are genuinely convinced we are either months or decades away from building a superintelligence that will, depending on who you ask, paperclip the universe, subjugate humanity, or simply make us all feel very irrelevant at dinner parties. The timeline varies. The existential dread is consistent. These people are at least honest about what they believe, which makes them the most tolerable group in this ecosystem, though that is not saying much.

Then you have the academics, who have their own incentive problem that nobody in academia likes to talk about. Researchers who work on AI safety get funding, attention, and conference invitations in proportion to how seriously people take the problem they are studying. If AI safety is a minor inconvenience that some clever engineers will sort out over a long weekend, the whole field loses its urgency and, more importantly, its grant money. If AI safety is an existential civilizational challenge requiring the smartest people alive working on it with extreme urgency right now, you get a very different funding environment and a much better book deal. This is not a conspiracy. It is just how research ecosystems work. The bigger the problem, the bigger the field, the nicer the campus office.

Then there are the grifters, and this is where things get genuinely annoying. These are the people who figured out that AI doom is a content vertical. They are selling courses on how to survive the AI apocalypse. They are writing Substack newsletters with subject lines like "what they are not telling you" as if they have access to information that AI companies are hiding. They are on YouTube explaining, in a very calm and authoritative voice, why your job will not exist in eighteen months, right before the ad read for a productivity app that uses AI to organize your calendar. They do not care if they are right. Being right was never the product. Fear is the product and fear converts extremely well.

And then at the bottom, doing the most damage with the least self-awareness, you have the professional contrarians. These are the people who discovered that having a Very Strong Opinion about AI, specifically a negative one, is a reliable way to feel intellectually superior in any room. They have not read the papers. They have not used the tools in any serious way. But they have absolutely clocked that dunking on AI hype gets engagement from people who are tired of AI hype, and engagement is all they were ever after. They are not worried about superintelligence. They are worried about their follower count, and AI skepticism is just the current vehicle.

All of the above, by the way, share what I will call the prophet problem. Once you have publicly predicted something catastrophic and that prediction has been shared ten thousand times and you have built an entire audience around it, walking it back requires you to say "I was wrong about the most important thing I have ever said in public." That is not a sentence most humans find easy to form. So the timeline slips. The goalposts move. The catastrophe is always arriving, just slightly later than previously announced, for reasons that are very technical and that you would not understand.

Now here is the uncomfortable part for both camps. When you sit inside exponential model curves, watch scaling improvements happen daily, and are surrounded by breakthroughs that would have seemed like science fiction five years ago, it genuinely distorts your sense of what is possible and how fast it arrives. The hype side looks at the curve and concludes everything will be amazing very soon. The doomer looks at the same curve and concludes everything will be catastrophic very soon. They are both looking at the same exponential. They just have different feelings about what is waiting at the top, and wildly different Slack notification settings.

Prediction accuracy in tech has historically been terrible in both directions. IBM thought mainframes would rule forever. Meta bet billions on a metaverse that turned out to be an empty virtual office park with surprisingly good hand tracking and zero people in it. Tesla has been saying self-driving cars are around the corner for roughly a decade. The AI doomers of the 1980s predicted superintelligence by the mid-nineties, then adjusted, then adjusted again, and are still adjusting. Bold forecasts sell. Cautionary tales also sell, but only when they are scary enough to justify the book tour. Delivery is always slower and messier and weirder than anyone on stage admitted, in either direction.

If you want the grounded view, stop reading CEO announcements and stop doom-scrolling through rationalist Substack threads at eleven at night. Stop following the carousel people. The truth about what AI actually is and what it is actually doing lives in the integration work, in the gap between what was promised on stage and what actually runs in production, in the pile of half-finished internal tools that nobody uses because the outputs are inconsistent on Tuesdays and completely hallucinated on Thursdays.

The future has a long history of arriving exactly as weird and slow and anticlimactic as it wants to, completely indifferent to anyone's predictions, funding rounds, or subscriber counts.

It always shows up eventually. Just not on schedule.
